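Retrieval augmented generation (RAG) is a technique that combines a large language model with an external knowledge base. Documents relevant to the user's question are retrieved and handed to the model along with the question, yielding more accurate, higher-quality, domain-specific answers.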

I have been reading a lot about RAG lately, so it was time to put what I had learned to good use. I had the perfect use case: something modest enough for me to implement, large enough to serve as a credible demonstration, and, well, useful enough to make it worth my while to build in the first place.

Over the years, I have written more than 11,000 answers on Quora, the vast majority of them on theoretical physics: mostly gravity, quantum field theory, cosmology, and the fundamentals. What if, I asked myself, I could organize my Quora answers into a database suitable for a custom RAG solution?

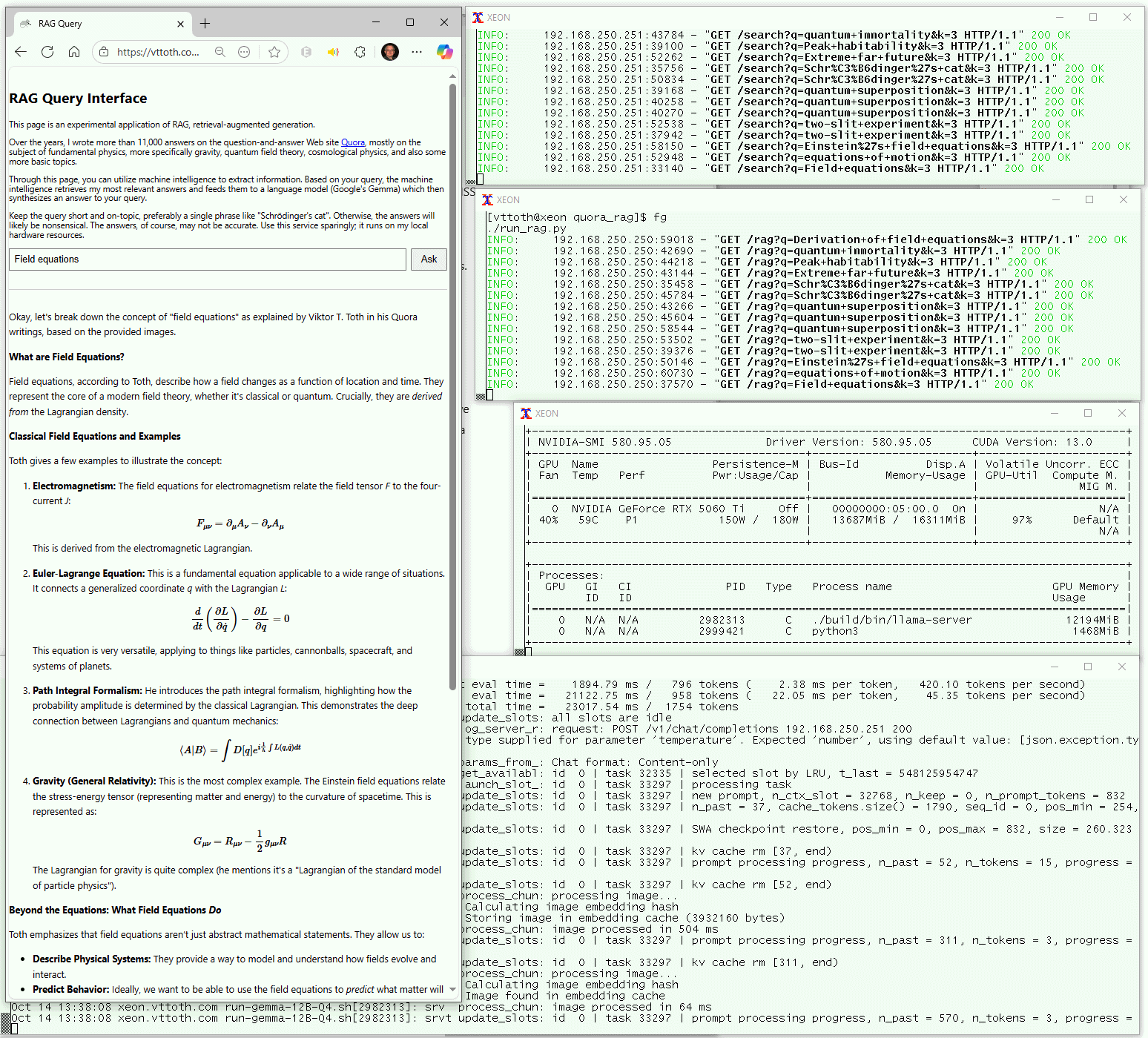

Long story short, that is exactly what I have done. Here is how the basic logic works.

First, I downloaded my Quora answers. Quora is quite helpful in this regard: you can request a copy of all your content, and within a day you receive a set of e-mails containing a number of ZIP files with all your content in HTML form. The first step was to write a fairly trivial script to extract individual answers from these monster HTML index files and file them in a database.
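A minimal sketch of the storage side, assuming the answers have already been split out of the export files (the layout of Quora's HTML export isn't described here, so that part is left out). It uses only Python's standard `sqlite3` and `html.parser` modules; the table name `answers`, its columns, and the helpers `html_to_text`, `open_db` and `store_answer` are hypothetical, chosen to hold the question, the formatted HTML and the plain text of each answer.

```python
import sqlite3
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects the text content of an HTML fragment, skipping <script> and <style>."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True by default, so &amp; etc. arrive decoded
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    """Hypothetical helper: drop the markup, keep the text, collapse whitespace."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.parts).split())


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the answers database; the schema is an assumption."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS answers (
               id       INTEGER PRIMARY KEY,
               question TEXT NOT NULL,
               html     TEXT NOT NULL,  -- formatted answer, kept for rendering
               text     TEXT NOT NULL   -- plain text, used for embedding
           )"""
    )
    return conn


def store_answer(conn: sqlite3.Connection, question: str, answer_html: str) -> int:
    """Insert one answer and return its unique ID."""
    cur = conn.execute(
        "INSERT INTO answers (question, html, text) VALUES (?, ?, ?)",
        (question, answer_html, html_to_text(answer_html)),
    )
    conn.commit()
    return cur.lastrowid
```

Keeping the original HTML alongside the plain text pays off later: the plain text feeds the embedding step, while the HTML is what gets rendered for the model.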

Next comes the real challenge. How do you build a database that allows for context-aware retrieval of relevant documents? And by context-aware, I don't just mean that it has the right keywords. No, it's something much deeper: finding documents whose semantic content is relevant to the query.

In comes a concept called cosine similarity. How similar are two vectors in a multidimensional vector space? Well, calculate the cosine of the angle between them. If the two vectors point in the same direction (regardless of their lengths), the cosine is 1. If they are orthogonal, the cosine is zero. If they point in opposite directions, it is -1. The larger the value, the more similar the vectors.
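In formula form, cos θ = (a · b) / (|a| |b|): the dot product of the two vectors divided by the product of their lengths. A plain-Python sketch of that formula (the function name is illustrative):

```python
import math


def cosine_similarity(a, b):
    """cos(theta) = (a . b) / (|a| |b|) for two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


print(cosine_similarity([3, 4], [6, 8]))   # same direction, different length: 1.0
print(cosine_similarity([1, 0], [0, 1]))   # orthogonal: 0.0
print(cosine_similarity([1, 0], [-1, 0]))  # opposite directions: -1.0
```

Real embeddings have hundreds of dimensions rather than two, but the arithmetic is identical.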

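As to what those vectors are: they are embeddings, abstract vectors that a dedicated embedding model computes from a piece of text, such that texts with similar meaning end up close to one another. Storing and searching them is where FAISS (Facebook AI Similarity Search) comes in. FAISS is a library developed by Facebook AI Research for efficient similarity search over large collections of such vectors and, you guessed it, it can use cosine similarity to find related items.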
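In FAISS itself, a common way to get cosine similarity is to L2-normalize the vectors with `faiss.normalize_L2` and store them in an exact inner-product index such as `faiss.IndexFlatIP`: for unit-length vectors, the inner product is the cosine. To keep the sketch dependency-free, the code below is a pure-Python stand-in for that brute-force search, not FAISS. The class `FlatCosineIndex` is hypothetical, and the toy 3-dimensional vectors stand in for real embeddings. It also keeps a parallel list of database IDs, since a flat index on its own only knows vector positions.

```python
import math


def normalize(v):
    """Scale a vector to unit length, so inner product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    if n == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / n for x in v]


class FlatCosineIndex:
    """Hypothetical stand-in for an exact inner-product index over normalized
    vectors. Brute force: every stored vector is scored against the query."""

    def __init__(self):
        self._vectors = []  # unit-length embeddings
        self._ids = []      # database IDs, parallel to _vectors

    def add(self, doc_id, vector):
        self._ids.append(doc_id)
        self._vectors.append(normalize(vector))

    def search(self, query, k):
        """Return up to k (doc_id, score) pairs, highest cosine similarity first."""
        q = normalize(query)
        scored = [
            (doc_id, sum(a * b for a, b in zip(q, v)))
            for doc_id, v in zip(self._ids, self._vectors)
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


index = FlatCosineIndex()
index.add(101, [0.9, 0.1, 0.0])  # toy vectors, not real embeddings
index.add(102, [0.0, 1.0, 0.2])
index.add(103, [0.8, 0.3, 0.1])
top_ids = [doc_id for doc_id, _ in index.search([1.0, 0.2, 0.0], k=2)]
```

Here `top_ids` comes out as `[101, 103]`. An exact, brute-force search is adequate at the scale of about 11,000 answers; FAISS also offers approximate index types for much larger collections.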

With these building blocks at hand, I was ready to put together my RAG service. Well, almost. There was a catch: many of my answers (not all) contained formatted equations, and some contained diagrams. Equations come across poorly in raw text; diagrams, not at all. Fortunately, many of the more recent language models, including the one I intended to use, are multimodal "text+vision" models: that is to say, they can accept as input not just plain text but also images. And they are sophisticated enough to "read" those images, including the text, equations and diagrams they contain.

This, then, defined my pipeline. The main steps:

- Extract Quora answers into a database: both plain text and formatted HTML, along with the question.

- Run a process to build a FAISS embedding index. I opted to use an embedding model called BGE for this purpose, on the recommendation of ChatGPT in a technical discussion. The model can be obtained from Hugging Face under an MIT license, along with Python tools to run the model and process data. On a GPU, the processing was very efficient: my answers up to 2024, close to 11,000 of them, were processed in under 6 minutes.

- Build a simple search service that takes a query string and returns a fixed number (top-k) of the most relevant documents, or rather, their unique IDs in the database of Quora answers.

- Build another service that takes a query string, runs it against the search service, and then retrieves the corresponding Quora answers. Now comes the tricky part: using a browser engine, the service renders these answers as Web pages and saves them as raster (PNG) images. The user's query, together with a suitably crafted system prompt and the PNG images, is then sent to the model.

- The model itself also runs locally. I am using Gemma, Google's open-weight language model, in its 12-billion-parameter version (Gemma-12B). Quantized to 4 bits, it fits comfortably within the 16 GB of video memory on my GPU, leaving enough room for the FAISS engine.
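The post doesn't say how the local model is served. Assuming, purely for illustration, that it sits behind an OpenAI-compatible chat endpoint (several local inference servers offer one), the request carrying the system prompt, the user's query and the rendered PNG answers could be assembled as below. `build_request`, the model name `gemma-12b` and any prompt wording are placeholders; rendering the pages and actually sending the request are left out.

```python
import base64


def build_request(query, png_images, system_prompt, model="gemma-12b"):
    """Assemble an OpenAI-style chat request (hypothetical helper).

    The system prompt goes in its own message; the user message holds the
    query text followed by each rendered answer as a base64 data-URL image.
    The default model name is a placeholder, not a real deployment name.
    """
    content = [{"type": "text", "text": query}]
    for png in png_images:
        encoded = base64.b64encode(png).decode("ascii")
        content.append(
            {
                "type": "image_url",
                "image_url": {"url": f"data:image/png;base64,{encoded}"},
            }
        )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": content},
        ],
    }
```

Base64 data URLs keep the query and all retrieved images in a single JSON payload, so no separate file-upload step is needed.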

And... we are done. I now have a functional, dare I say useful, RAG service that allows the user to query my Quora answers on any topic. Needless to say, the topic has to be related to my writing: if you ask the model about the Sanskrit language, my answers are unlikely to be of any help. But ask it about a hardcore physics topic, say, Einstein's field equations, and you will get a meaningful answer.

I admit I am sometimes mesmerized watching the interplay between the various components (dare I say "microservices"?) that, choreographed together, produce meaningful results.

The best part? Even including the run-once script that generates the FAISS index, it took barely more than 200 lines of well-crafted Python code to make it all happen.